Artificial intelligence ‘won’t solve all healthcare problems’, new report warns

5 February 2019

Artificial intelligence (AI) isn’t going to solve all the problems facing the healthcare sector, a new report has warned.

The Artificial Intelligence in Healthcare report, produced by the Academy of Medical Royal Colleges and commissioned by NHS Digital, looked at the clinical, ethical and practical concerns surrounding AI in the UK’s health and social care system.

The Academy made seven key recommendations for politicians, policymakers and service providers.

Among them, the report said these groups “should avoid thinking AI is going to solve all the problems the health and care systems across the UK are facing”.

The report also found that, despite some claims, AI is unlikely to replace specialist clinicians, although future doctors may need training in data science.

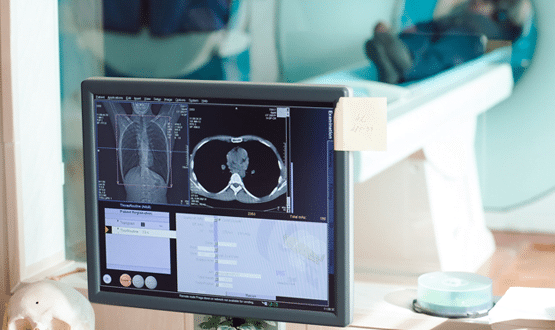

Professor Carrie MacEwan, chair of the Academy, said: “With AI starting to be used in some areas of clinical activity, such as retinal eye scans and targeting radiotherapy to treat cancer, it has been claimed the need for some medical specialists will be much reduced in the future.

“The Academy enquiry has found that, if anything, the opposite is true and that AI is not likely to replace clinicians for the foreseeable future but makes the case for training more doctors in data science as well as medicine.”

Other key recommendations included: AI must be developed in a regulated way, in partnership between clinicians and computer scientists, to ensure patient safety; there should be clearer guidance on accountability, responsibility and the wider legal implications of AI; data should be made more easily available across the private and public sectors to those who meet governance standards; and tech companies must be transparent so that clinicians can be confident in the tools they are using.

Dr Indra Joshi, digital health and AI clinical lead at NHS England, said: “We’ve got a real opportunity with AI based tech to gain time and efficiencies, but it has to be implemented in a safe and trusted way.”

AI is already used in the health sector in some capacity.

Google’s DeepMind has taught machines to read retinal scans with at least as much accuracy as an experienced junior doctor.

Other projects are underway, including a British Heart Foundation-funded project to develop a machine learning tool that helps predict people’s risk of heart attack from their health records.

The Academy of Medical Royal Colleges’ recommendations in full:

- Politicians and policymakers should avoid thinking that AI is going to solve all the problems the health and care systems across the UK are facing. Artificial intelligence in everyday life is still in its infancy. In health and care it has hardly started – despite the claims of some high-profile players.

- As with traditional clinical activity, patient safety must remain paramount and AI must be developed in a regulated way in partnership between clinicians and computer scientists. However, regulation cannot be allowed to stifle innovation.

- Clinicians can and must be part of the change that will accompany the development and use of AI. This will require changes in behaviour and attitude including rethinking many aspects of doctors’ education and careers. More doctors will be needed who are as well versed in data science as they are in medicine.

- For those who meet information handling and governance standards, data should be made more easily available across the private and public sectors. It should be certified for accuracy and quality. It is for Government to decide how widely that data is shared with non-domestic users.

- Joined up regulation is key to make sure that AI is introduced safely, as currently there is too much uncertainty about accountability, responsibility and the wider legal implications of the use of this technology.

- External critical appraisal and transparency of tech companies is necessary for clinicians to be confident that the tools they are providing are safe to use. In many respects, AI developers in healthcare are no different from pharmaceutical companies who have a similar arms-length relationship with care providers. This is a useful parallel and could serve as a template. As with the pharmaceutical industry, licensing and post-market surveillance are critical and methods should be developed to remove unsafe systems.

- Artificial intelligence should be used to reduce, not increase, health inequality – geographically, economically and socially.

2 Comments

AI is a wonderful tool and certainly will help many. It already does, for example http://www.mayamd.ai. I believe its best benefit will be insight. Insight for doctors and patients is useful if we understand it and then, of course, use it to enhance our lives.

This report is very welcome – AI/machine learning seems to be subject to a level of irrational exuberance right now. It reminds me of the early dotcom bubble where everyone temporarily lost touch with reality.

I’m sure these technologies will have a big impact eventually – just not as quickly and not in the way some of the early evangelists believe.
